I received my B.S. degree in Statistics from Wuhan University of Technology (WHUT, 武汉理工大学). Currently, I am a Ph.D. candidate in Computational Mathematics at the School of Mathematics, South China University of Technology (SCUT, 华南理工大学), advised by Prof. Delu Zeng. I also collaborate with John Paisley (Columbia University), Junmei Yang (SCUT), Qibin Zhao (RIKEN-AIP), Jiacheng Li (SCUT), Shigui Li (SCUT), Jian Xu (SCUT / RIKEN-AIP), and Shian Du (Tsinghua University).

My research focuses on deep generative modeling and density ratio estimation, with particular interests in diffusion models, normalizing flows, and stochastic interpolation. I aim to develop mathematically grounded methods for probabilistic inference.

I have published more than 10 papers in top international AI conferences and journals, with a total of 60+ Google Scholar citations.

Feel free to reach me at weichen_work@126.com! 😃

🔥 News

- 2025.10: Our paper about diffusion informer for time series modeling is accepted to Expert Systems with Applications (ESWA).

- 2025.10: Our paper about time series modeling is accepted to IEEE Transactions on Instrumentation & Measurement (TIM).

- 2025.10: Our paper about wavelet diffusion for time series modeling is accepted to IEEE TIM.

- 2025.09: Our paper about diffusion modeling acceleration is accepted to NeurIPS 2025.

- 2025.09: Our paper about normalizing flow is accepted to Pattern Recognition (PR).

- 2025.08: Our paper about diffusion models for low-level computer vision is accepted to Neurocomputing.

- 2025.05: Our paper about stable & efficient density ratio estimation is accepted to ICML 2025.

- 2022.02: Our paper about efficient continuous normalizing flow is accepted to CVPR 2022.

📝 Publications

Deep Generative Modeling

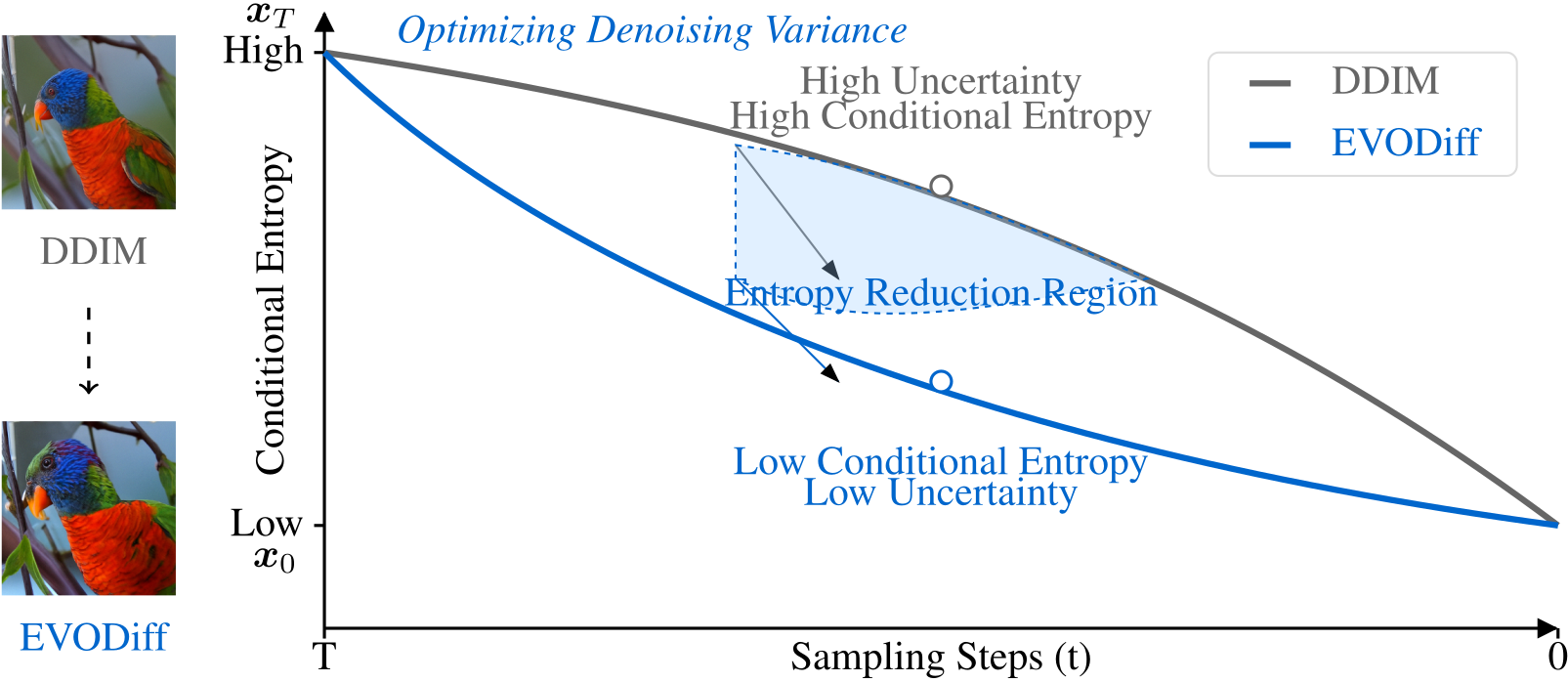

EVODiff: Entropy-aware Variance Optimized Diffusion Inference, Shigui Li, Wei Chen, Delu Zeng*

NeurIPS 2025 | Paper | Code | News🎉

- Introduces an information-theoretic view: successful denoising reduces conditional entropy in reverse transitions.

- Proposes EVODiff, a reference-free diffusion inference framework that optimizes conditional variance to reduce transition and reconstruction errors, improving sample quality and reducing inference cost.
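As a back-of-the-envelope illustration of the entropy view (not the EVODiff algorithm itself), assume each reverse transition is an isotropic Gaussian `N(mu, sigma^2 I)` in `d` dimensions. Its differential entropy is `(d/2) log(2*pi*e*sigma^2)`, so lowering the conditional variance directly lowers the conditional entropy of the transition. The helper `gaussian_entropy` and the listed variances are hypothetical.

```python
import math

def gaussian_entropy(sigma2: float, d: int) -> float:
    """Differential entropy (in nats) of an isotropic Gaussian N(mu, sigma2 * I_d).

    The mean does not affect the entropy; only the variance and dimension do.
    """
    if sigma2 <= 0:
        raise ValueError("variance must be positive")
    return 0.5 * d * math.log(2 * math.pi * math.e * sigma2)

# Hypothetical per-step variances of a reverse transition, from large to small.
d = 3 * 32 * 32  # e.g. a 32x32 RGB image
for sigma2 in (1.0, 0.5, 0.1):
    print(f"sigma^2={sigma2:<4} entropy={gaussian_entropy(sigma2, d):.1f} nats")
```

The point is only qualitative: in this Gaussian picture, a smaller conditional variance means a more certain (lower-entropy) denoising step.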

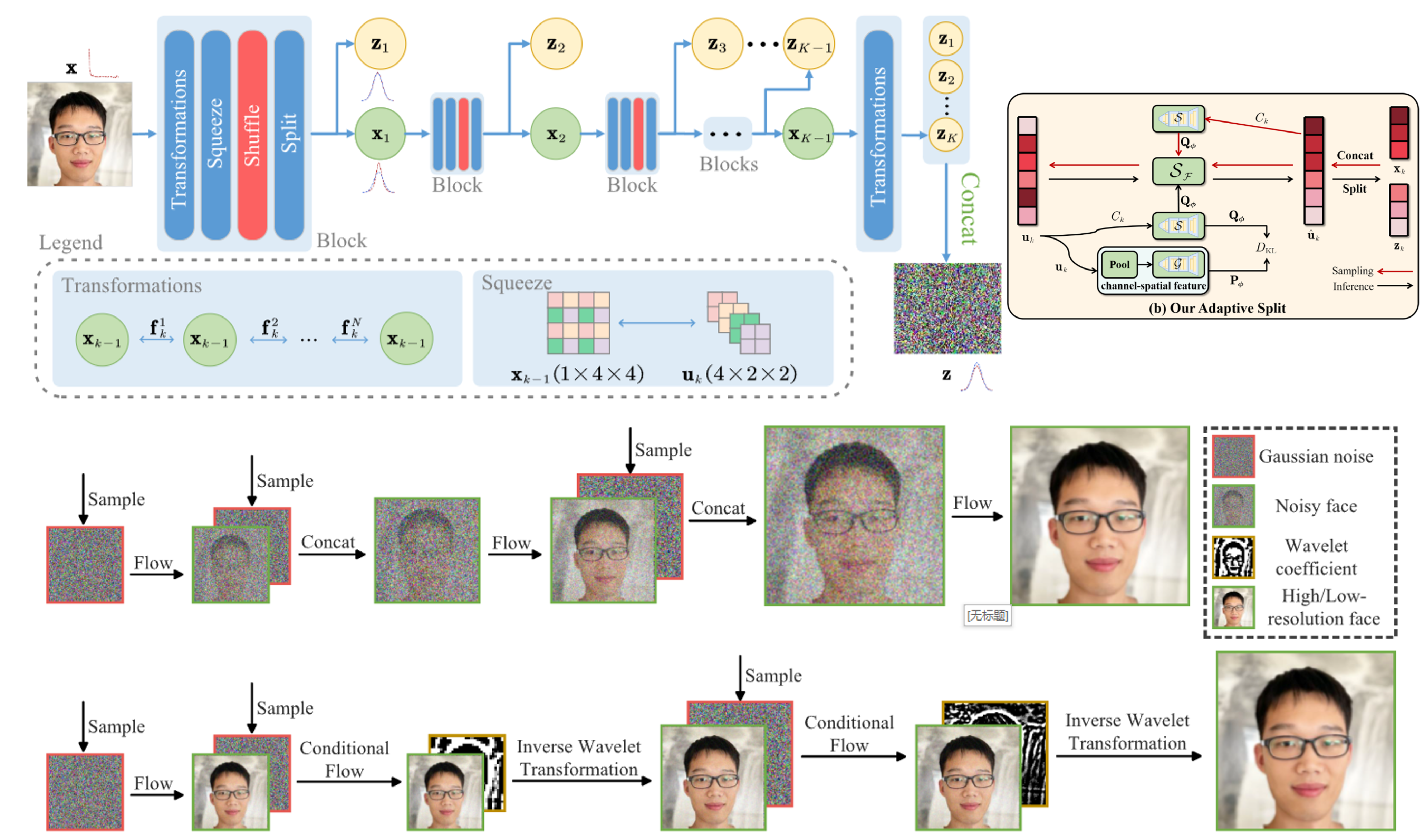

Entropy-informed weighting channel normalizing flow for deep generative models, Wei Chen#, Shian Du#, Shigui Li#, Delu Zeng*, John Paisley

Pattern Recognition 2025 | Paper | Code

- Proposes Entropy-Informed Weighting Channel Normalizing Flow (EIW-Flow), enhancing multi-scale normalizing flows with a regularized, feature-dependent operation that generates channel-wise weights and shuffles latent variables before splitting.

- Empirically achieves state-of-the-art density estimation and competitive sample quality with minimal computational overhead on multiple benchmarks.
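A rough NumPy sketch of the "weight channels, shuffle, then split" step in a multi-scale flow, under simplifying assumptions: the channel weights here come from a softmax over hypothetical per-channel `scores` (in EIW-Flow they are feature-dependent and regularized), and the shuffle is a fixed random permutation `perm`. Because a positive per-channel scale is invertible with a diagonal Jacobian, its log-determinant is simply `H * W * sum(log w_c)`.

```python
import numpy as np

def channel_weights(scores):
    """Positive weights with mean 1 (softmax scaled by C), so the scaling is invertible."""
    e = np.exp(scores - scores.max())
    return scores.shape[0] * e / e.sum()

def weight_shuffle_split(x, scores, perm):
    """Channel-wise weighting, channel shuffle, then split. x has shape (C, H, W).

    Returns (z_kept, z_factored, log_det).
    """
    C, H, W = x.shape
    w = channel_weights(scores)
    y = x * w[:, None, None]              # invertible per-channel scaling
    log_det = H * W * np.sum(np.log(w))   # diagonal Jacobian
    y = y[perm]                           # shuffle channels before splitting
    half = C // 2
    return y[:half], y[half:], log_det

def inverse(z_kept, z_factored, scores, perm):
    """Undo split, shuffle and weighting to recover x."""
    w = channel_weights(scores)
    y = np.concatenate([z_kept, z_factored], axis=0)
    unshuffled = np.empty_like(y)
    unshuffled[perm] = y                  # invert the permutation
    return unshuffled / w[:, None, None]

rng = np.random.default_rng(0)
x = rng.normal(size=(4, 8, 8))
scores = rng.normal(size=4)               # hypothetical channel scores
perm = rng.permutation(4)
z1, z2, log_det = weight_shuffle_split(x, scores, perm)
x_rec = inverse(z1, z2, scores, perm)
print(np.allclose(x, x_rec), log_det)
```

Shuffling before the split means the factored-out half is not always the same fixed set of channels, which is the intuition behind mixing channels ahead of a multi-scale split.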

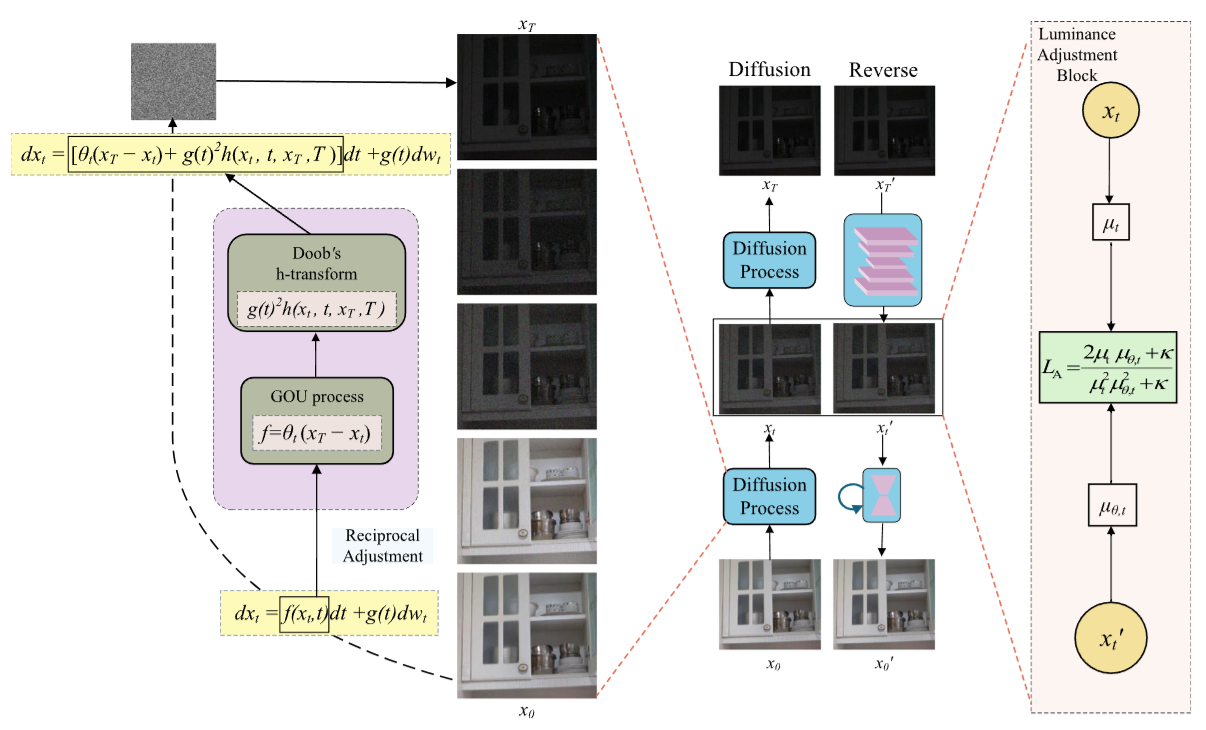

ReciprocalLA-LLIE: Low-light image enhancement with luminance-aware reciprocal diffusion process, Zhiqi Lin, Wei Chen, Jian Xu, Delu Zeng*, Min Chen

Neurocomputing 2025 | Paper

- Proposes a reciprocal diffusion process (between low-light and well-exposed images) within a score-based DDPM framework via drift adjustment, with the low-light image as an endpoint state rather than only a conditional input.

- Introduces a Luminance Adjustment Block for robust global luminance control, suppressing noise and preserving details.
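The paper defines its reciprocal process through a drift adjustment in a score-based DDPM. As a much simpler stand-in, the sketch below samples the marginal of a Brownian bridge pinned at a low-light image (`t = 0`) and a well-exposed image (`t = 1`), to illustrate what it means for the low-light image to be an endpoint state rather than only a conditioning input. The function name, the noise scale `sigma`, and the toy images are assumptions.

```python
import numpy as np

def bridge_sample(x_low, x_ref, t, sigma=0.5, rng=None):
    """Sample the Brownian-bridge marginal between two endpoint images.

    At t=0 this returns x_low exactly and at t=1 it returns x_ref exactly;
    in between, Gaussian noise with variance sigma^2 * t * (1 - t) is added.
    """
    rng = np.random.default_rng() if rng is None else rng
    mean = (1.0 - t) * x_low + t * x_ref
    std = sigma * np.sqrt(t * (1.0 - t))
    return mean + std * rng.standard_normal(x_low.shape)

rng = np.random.default_rng(0)
x_low = 0.1 * rng.random((3, 16, 16))   # hypothetical dark image in [0, 0.1]
x_ref = rng.random((3, 16, 16))         # hypothetical well-exposed image in [0, 1]
for t in (0.0, 0.5, 1.0):
    x_t = bridge_sample(x_low, x_ref, t, rng=rng)
    print(t, float(x_t.mean()))
```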

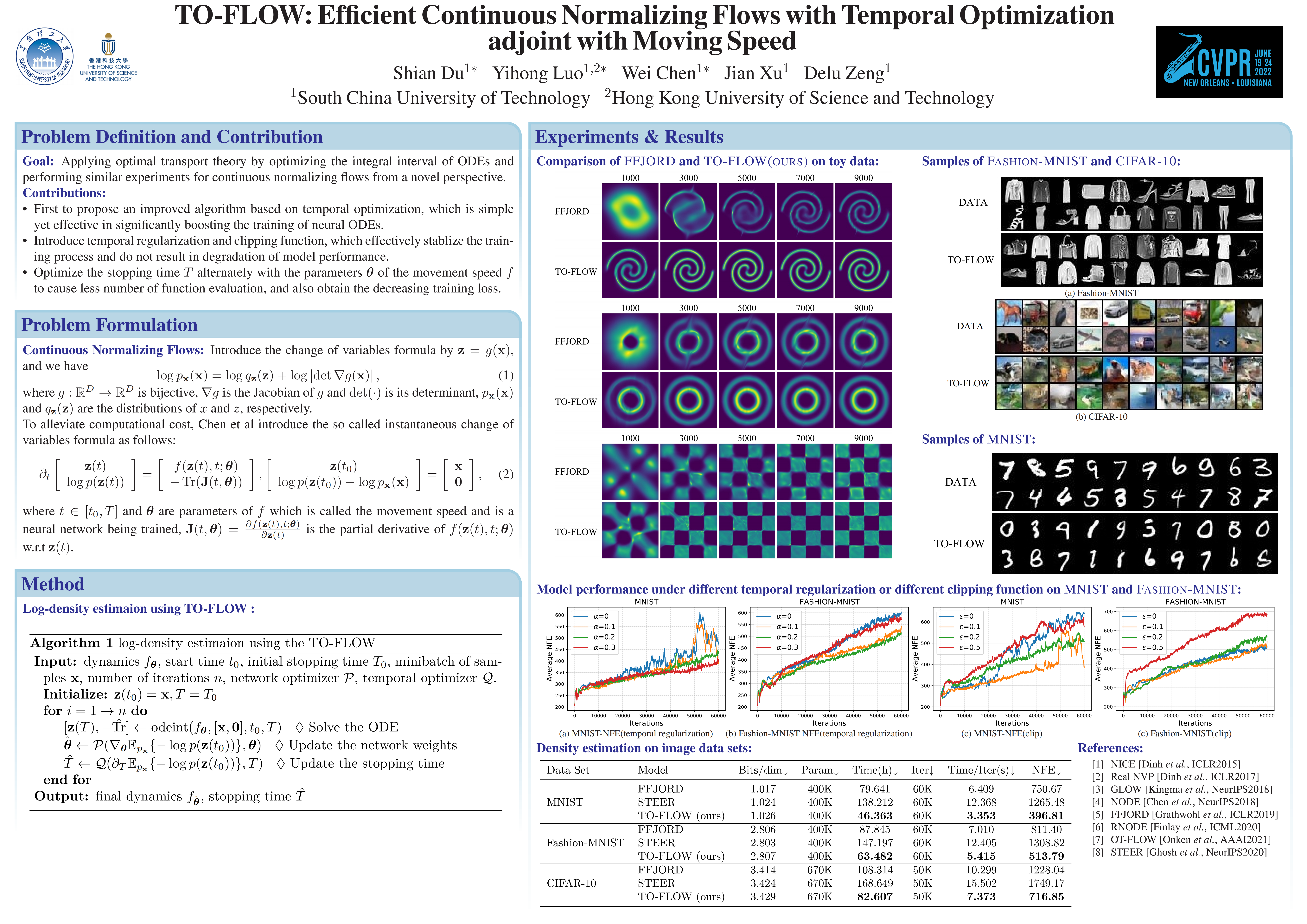

To-Flow: Efficient Continuous Normalizing Flows with Temporal Optimization Adjoint with Moving Speed, Shian Du#, Yihong Luo#, Wei Chen#, Jian Xu, Delu Zeng*

CVPR 2022

- Continuous normalizing flows (CNFs) built on neural ODEs are costly to train on large datasets. To-Flow proposes temporal optimization, alternately optimizing the network weights and the evolutionary time (coordinate descent), with temporal regularization for stability.

- Key result: accelerates flow training by about 20% while maintaining performance.
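The alternating scheme can be illustrated on a toy scalar ODE `dx/dt = theta * x` integrated from `0` to `T`, where `theta` plays the role of the network weights and `T` the evolution time. This is a sketch under stated assumptions, not the To-Flow implementation: the closed-form solution replaces an ODE solver and adjoint, and the quadratic penalty `lam * T**2` is a hypothetical stand-in for the paper's temporal regularization.

```python
import math

def loss_and_grads(theta, T, x0=1.0, y=math.e, lam=0.01):
    """Toy objective for dx/dt = theta * x integrated from 0 to T.

    The ODE has the closed-form solution x(T) = x0 * exp(theta * T), so the
    gradients below are exact. lam * T**2 is a hypothetical temporal regularizer.
    """
    u = x0 * math.exp(theta * T)
    r = u - y
    loss = r * r + lam * T * T
    d_theta = 2.0 * r * u * T
    d_T = 2.0 * r * u * theta + 2.0 * lam * T
    return loss, d_theta, d_T

theta, T, lr = 0.5, 1.0, 0.02
initial_loss, _, _ = loss_and_grads(theta, T)
for step in range(100):
    # Block 1: update the "network weight" theta with T frozen.
    _, d_theta, _ = loss_and_grads(theta, T)
    theta -= lr * d_theta
    # Block 2: update the evolution time T with theta frozen.
    _, _, d_T = loss_and_grads(theta, T)
    T = max(T - lr * d_T, 1e-3)  # keep the integration time positive
final_loss, _, _ = loss_and_grads(theta, T)
print(f"loss {initial_loss:.4f} -> {final_loss:.4f}, theta={theta:.3f}, T={T:.3f}")
```

In a real CNF, a shorter evolution time generally means fewer solver steps, which is where a training speed-up can come from.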

Density Ratio Estimation

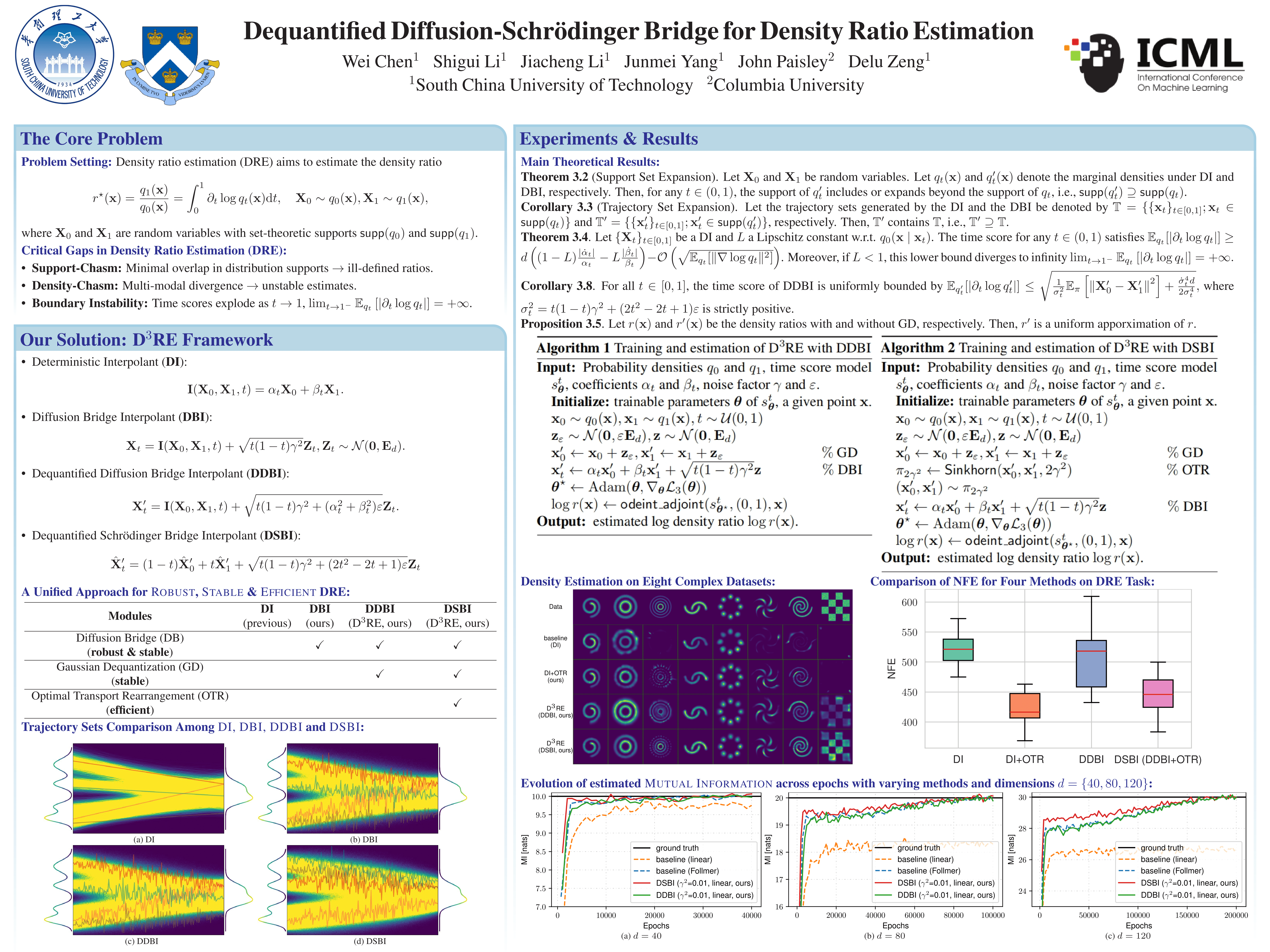

Dequantified Diffusion-Schrödinger Bridge for Density Ratio Estimation, Wei Chen, Shigui Li, Jiacheng Li, Junmei Yang, John Paisley, Delu Zeng*

ICML 2025

- Proposes D3RE, a unified framework for robust and stable density ratio estimation under distribution mismatch (density-chasm / support-chasm), addressing instability from divergent time scores near boundaries.

- Introduces dequantified diffusion bridge interpolant (DDBI) for support expansion and stabilized time scores; further extends to dequantified Schrödinger bridge interpolant (DSBI) to enhance accuracy and efficiency.
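The time score `d/dt log p_t(x)` of an interpolated density path is what this family of density ratio estimators integrates: `log p_0(x) - log p_1(x) = -integral_0^1 d/dt log p_t(x) dt`. The 1-D NumPy check below uses Gaussian endpoints and a diffusion-bridge-style interpolant `x_t = (1-t) x_0 + t x_1 + gamma * sqrt(t(1-t)) z`, whose marginal is Gaussian with a closed-form time score; `gamma > 0` adds variance in the middle of the path. It only illustrates the identity: dequantification, the Schrödinger-bridge variant, and learning the time score with a network are not modeled, and all names and parameters are illustrative.

```python
import numpy as np

def gaussian_logpdf(x, mean, var):
    return -0.5 * np.log(2 * np.pi * var) - (x - mean) ** 2 / (2 * var)

def time_score(x, t, mu0, s0, mu1, s1, gamma):
    """d/dt log p_t(x) for x_t = (1-t) x0 + t x1 + gamma * sqrt(t(1-t)) z."""
    m = (1 - t) * mu0 + t * mu1
    v = (1 - t) ** 2 * s0**2 + t**2 * s1**2 + gamma**2 * t * (1 - t)
    dm = mu1 - mu0
    dv = -2 * (1 - t) * s0**2 + 2 * t * s1**2 + gamma**2 * (1 - 2 * t)
    return -0.5 * dv / v + (x - m) * dm / v + 0.5 * (x - m) ** 2 * dv / v**2

def log_ratio_via_time_score(x, mu0, s0, mu1, s1, gamma=1.0, n=2001):
    """Estimate log p0(x) - log p1(x) = -int_0^1 d/dt log p_t(x) dt (trapezoid rule)."""
    t = np.linspace(0.0, 1.0, n)
    f = time_score(x, t, mu0, s0, mu1, s1, gamma)
    integral = np.sum(0.5 * (f[1:] + f[:-1]) * np.diff(t))
    return -integral

mu0, s0, mu1, s1 = 0.0, 1.0, 3.0, 0.5   # two well-separated 1-D Gaussians
x = 1.2
exact = gaussian_logpdf(x, mu0, s0**2) - gaussian_logpdf(x, mu1, s1**2)
estimate = log_ratio_via_time_score(x, mu0, s0, mu1, s1)
print(exact, estimate)
```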

Time Series Forecasting

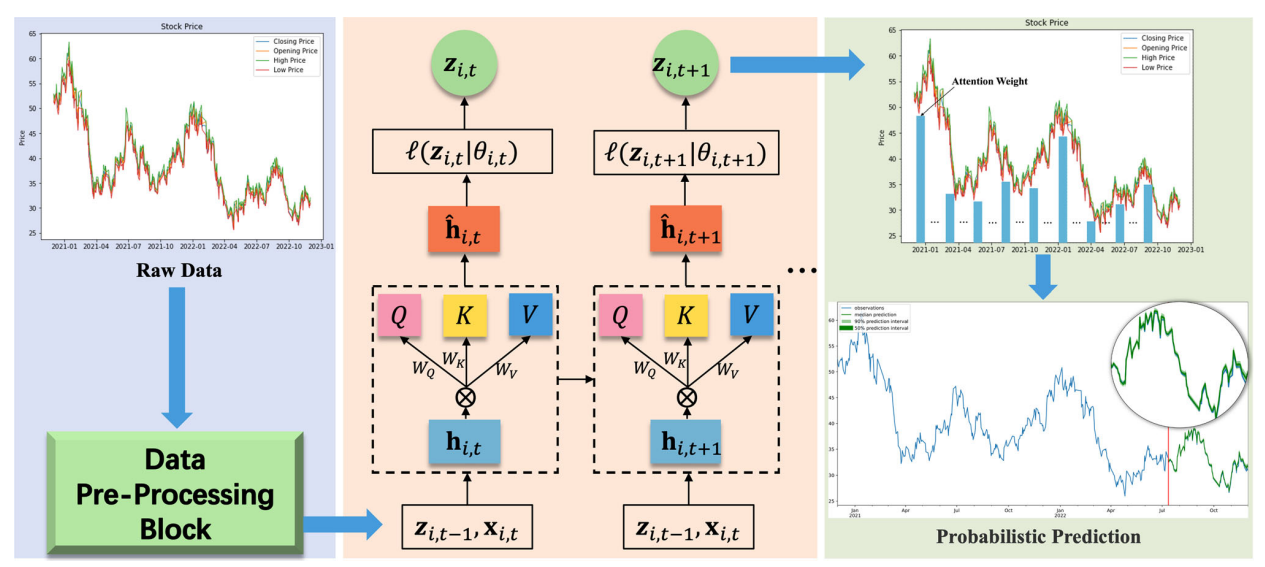

DeepAR-Attention probabilistic prediction for stock price series, Jiacheng Li, Wei Chen, Zhiheng Zhou, Junmei Yang, Delu Zeng*

Neural Computing and Applications 2024 | Paper

- Proposes a DeepAR-Attention probabilistic forecasting approach for stock price series.

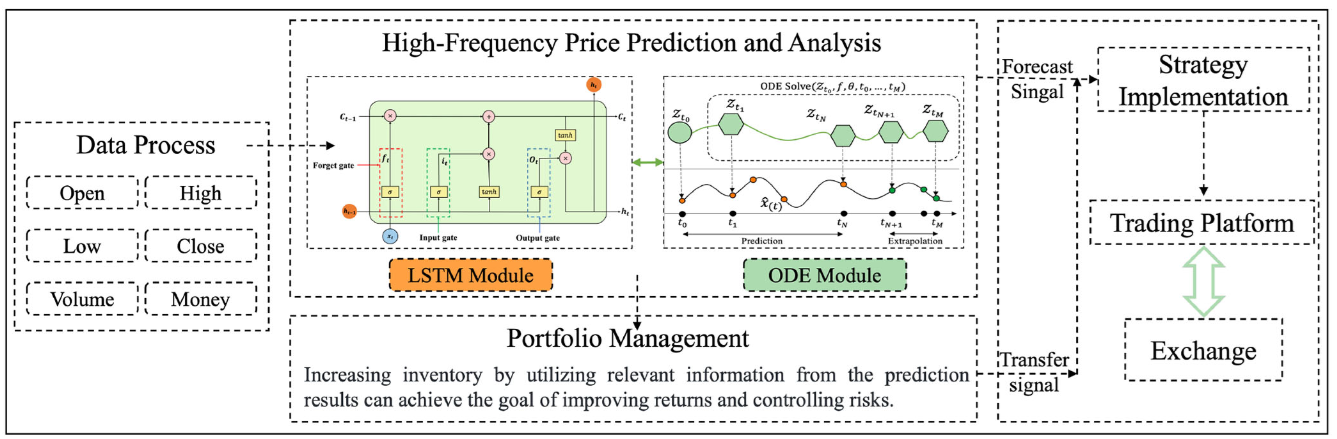

Neural ordinary differential equation networks for fintech applications using Internet of Things, Jiacheng Li, Wei Chen, Yican Liu, Junmei Yang, Delu Zeng*, Zhiheng Zhou

IEEE Internet of Things Journal 2024 | Paper

- Develops neural ODE network approaches for fintech applications in IoT settings.

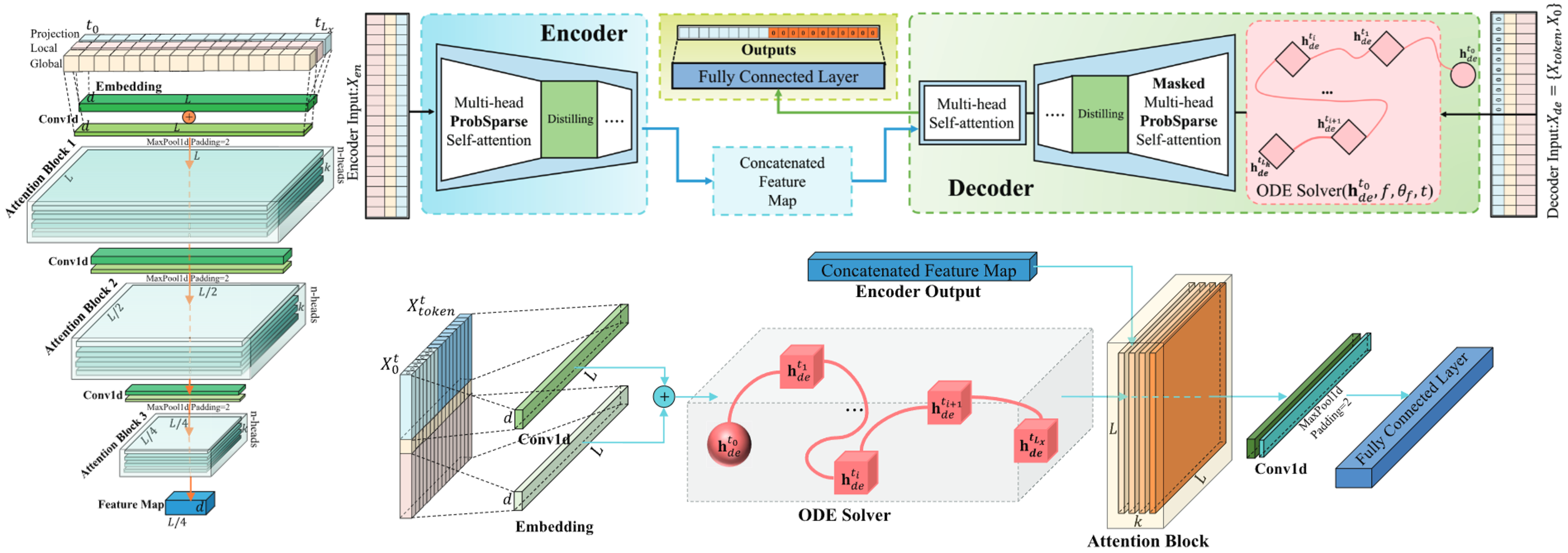

Integrating Ordinary Differential Equations with Sparse Attention for Power Load Forecasting, Jiacheng Li, Wei Chen, Yican Liu, Junmei Yang, Zhiheng Zhou, Delu Zeng*

IEEE Transactions on Instrumentation and Measurement 2025 | Paper

- Proposes EvolvInformer: integrates an ODE solver into a ProbSparse self-attention decoder to model continuous-time hidden state dynamics for nonstationary long-sequence load forecasting.

- Reports a 29.7% MSE reduction versus state-of-the-art baselines while preserving the O(L log L) memory complexity of ProbSparse attention.
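ProbSparse attention, which EvolvInformer builds on (it comes from Informer), gives full softmax attention only to the few "active" queries, ranked by the max-minus-mean score `M(q_i, K)`, and lets the remaining queries output the mean of `V`. The NumPy sketch below is simplified: it computes `M` over all keys instead of Informer's sampled subset (so it shows the selection rule, not the memory savings), omits the decoder mask, and does not model the ODE component. The factor `c` and the toy sizes are assumptions.

```python
import numpy as np

def probsparse_attention(Q, K, V, c=1.0):
    """Simplified (non-masked) ProbSparse self-attention.

    Q: (L_Q, d), K: (L_K, d), V: (L_K, d_v). Only the top-u queries ranked by
    the max-minus-mean sparsity measurement get full softmax attention; the
    remaining "lazy" queries output the mean of V.
    """
    L_Q, d = Q.shape
    scores = Q @ K.T / np.sqrt(d)                        # (L_Q, L_K)
    sparsity = scores.max(axis=1) - scores.mean(axis=1)  # M(q_i, K)
    u = min(L_Q, max(1, int(c * np.ceil(np.log(L_Q)))))
    top = np.argsort(-sparsity)[:u]                      # most "active" queries

    out = np.tile(V.mean(axis=0), (L_Q, 1))              # lazy queries: mean(V)
    s = scores[top]
    w = np.exp(s - s.max(axis=1, keepdims=True))
    w /= w.sum(axis=1, keepdims=True)                    # row-wise softmax
    out[top] = w @ V
    return out, top

rng = np.random.default_rng(0)
L, d = 96, 16
Q, K, V = (rng.normal(size=(L, d)) for _ in range(3))
out, top = probsparse_attention(Q, K, V, c=5.0)
print(out.shape, len(top))
```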

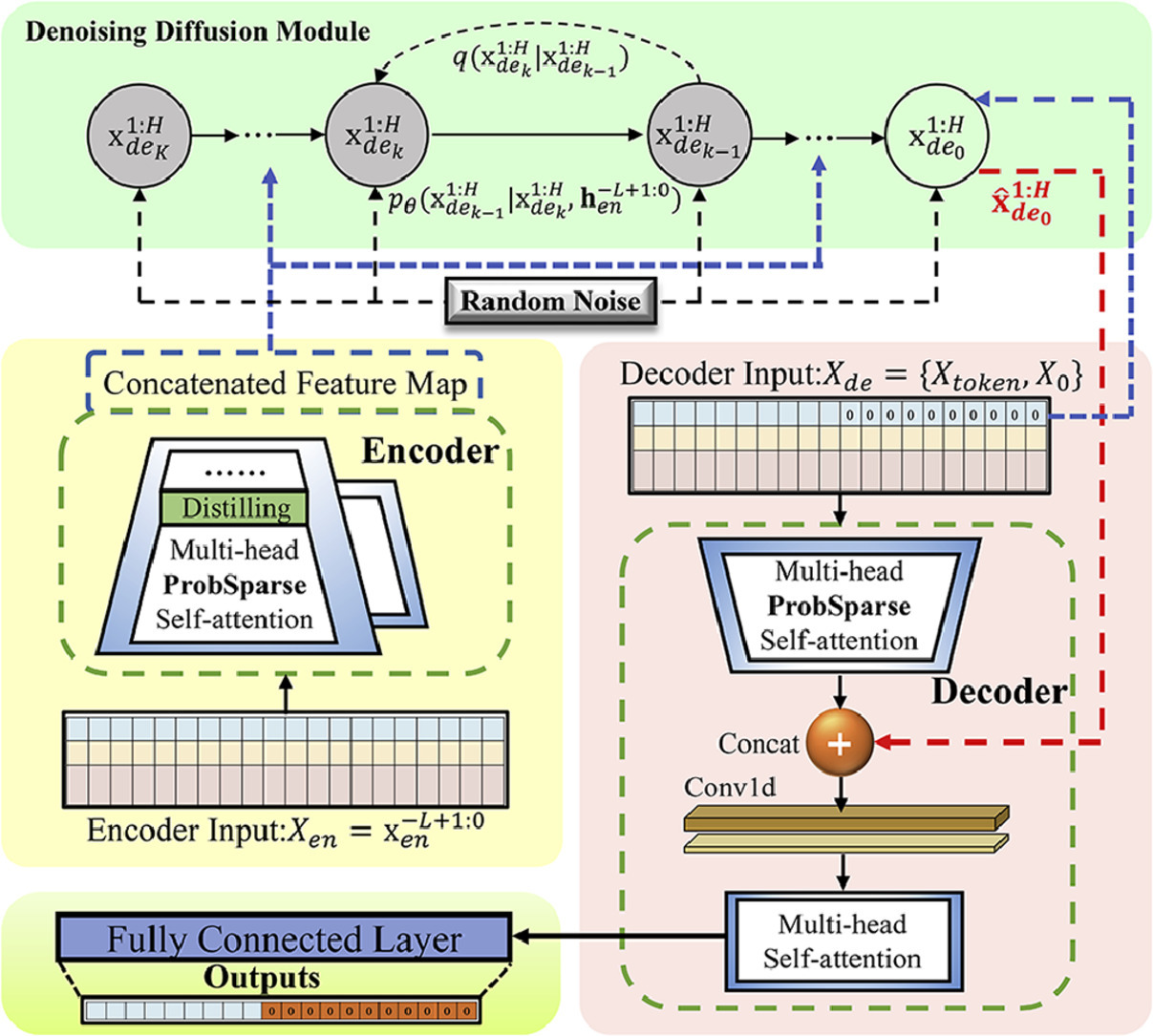

Diffinformer: Diffusion Informer model for long sequence time-series forecasting, Jiacheng Li, Wei Chen, Yican Liu, Junmei Yang, Zhiheng Zhou, Delu Zeng*

Expert Systems with Applications 2025 | Paper

- Proposes Diffinformer: combines conditional diffusion models with Informer’s ProbSparse self-attention and self-attention distilling mechanisms, feeding the diffusion outputs into the decoder to better capture long-range dependencies.

- Demonstrates consistent improvements over corresponding baselines across five large-scale datasets under limited computational resources.
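The conditional diffusion part of Diffinformer rests on the standard DDPM forward process `q(x_t | x_0) = N(sqrt(alpha_bar_t) x_0, (1 - alpha_bar_t) I)`. A minimal NumPy sketch applying it to a hypothetical 96-step target window, assuming the linear beta schedule from 1e-4 to 0.02 over 1000 steps used in the original DDPM paper; Diffinformer's actual schedule and its conditioning on Informer features are not shown.

```python
import numpy as np

def linear_alpha_bar(T=1000, beta_start=1e-4, beta_end=0.02):
    """Cumulative products alpha_bar_t = prod_{s<=t} (1 - beta_s)."""
    betas = np.linspace(beta_start, beta_end, T)
    return np.cumprod(1.0 - betas)

def q_sample(x0, t, alpha_bar, rng):
    """Draw x_t ~ q(x_t | x_0) = N(sqrt(alpha_bar_t) x0, (1 - alpha_bar_t) I)."""
    noise = rng.standard_normal(x0.shape)
    a = alpha_bar[t]
    return np.sqrt(a) * x0 + np.sqrt(1.0 - a) * noise, noise

rng = np.random.default_rng(0)
alpha_bar = linear_alpha_bar()
x0 = np.sin(np.linspace(0, 4 * np.pi, 96))[None, :]  # hypothetical target window
for t in (0, 499, 999):
    x_t, _ = q_sample(x0, t, alpha_bar, rng)
    print(t, round(float(alpha_bar[t]), 4), x_t.shape)
```

The returned `noise` is what a noise-prediction network would typically be trained to recover from `x_t`.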

🎖 Honors and Awards

- None.

📖 Education

- 2022.06 - present (expected 2026.06), Ph.D. Candidate in Computational Mathematics, School of Mathematics, South China University of Technology (SCUT).

- 2021.09 - 2022.06, M.S., Successive Master–Doctor Program in Computational Mathematics, School of Mathematics, South China University of Technology (SCUT).

- 2017.09 - 2021.06, B.S. in Statistics, School of Mathematics and Statistics, Wuhan University of Technology (WHUT).

💬 Invited Talks

- None.